Optimizing Quality Variables in Chemical Manufacturing: IndustryOS®


The Challenge: Unstable Quality in a High-Stakes Process

Global Chemical Solutions Inc. faced persistent challenges in optimizing product quality for their high-value polymer line. Key quality variables, primarily purity and viscosity, showed high variance across production batches. This instability led to significant material waste, costly reprocessing, and difficulty in meeting premium market demands. The core issue was the immense complexity of the production process, involving over 500 interacting variables.

The initial production data (Q3) revealed that over 60% of batches failed to meet the prime 99.0% purity target, resulting in significant value loss.

The Classical Bottleneck

This chart conceptualizes the problem: classical methods (red dot) found a “good” solution (local optimum) but failed to find the “best” solution (global optimum, green dot) in the vast search space.

The Sparrow Infinity Quantum Solution

Sparrow Infinity introduced a “Quantum Thinking” methodology to reformulate the problem. Instead of a brute-force simulation, the team identified the core interacting variables and mapped their relationships to a Quadratic Unconstrained Binary Optimization (QUBO) problem. This industry-specific model was then solvable by quantum annealing hardware, which excels at finding the global minimum in complex systems.
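To make the QUBO idea concrete, here is a minimal sketch of what such a formulation looks like at toy scale. The three binary variables and every coefficient in `Q` are hypothetical and are not taken from the Global Chemical Solutions model; exhaustive enumeration stands in for the annealing hardware and is only practical for a handful of variables.

```python
from itertools import product

# Hypothetical QUBO: three binary process choices (1 = "use the high setting").
# Diagonal terms are per-variable costs; off-diagonal terms are pairwise interactions.
Q = {
    (0, 0): -1.0,  # choosing x0 alone lowers the cost
    (1, 1): -1.0,
    (2, 2): -1.0,
    (0, 1): 2.0,   # choosing x0 and x1 together is penalized
    (1, 2): 0.5,
}

def qubo_energy(x, Q):
    """Return the sum over (i, j) of Q[i, j] * x[i] * x[j] for a binary vector x."""
    return sum(coeff * x[i] * x[j] for (i, j), coeff in Q.items())

def brute_force_minimum(Q, n):
    """Enumerate all 2**n assignments; feasible only for very small n."""
    return min(product((0, 1), repeat=n), key=lambda x: qubo_energy(x, Q))

best = brute_force_minimum(Q, 3)
print(best, qubo_energy(best, Q))
```

The point of the encoding is that every interaction between process variables becomes a coefficient in `Q`, so the optimizer only ever has to minimize one quadratic function of binary variables.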

Comparative Performance: Classical vs. Quantum

A head-to-head comparison was conducted between the existing classical solver and the new Sparrow Infinity quantum model. The quantum annealer was able to explore the entire variable search space simultaneously, finding a certifiably optimal set of parameters in a fraction of the time. The classical solver, running for 24 hours, was still unable to match the quality of the solution found by the quantum annealer in under 30 minutes.

The quantum approach delivered a significantly higher-quality solution (99.8% optimal vs 92.5%) in a fraction of the time, while exploring 100% of the relevant problem space.

Breakthrough Results: The Impact of Optimization

By implementing the new process parameters identified by the Sparrow Infinity quantum model, Global Chemical Solutions Inc. achieved unprecedented stability and quality in their polymer line.

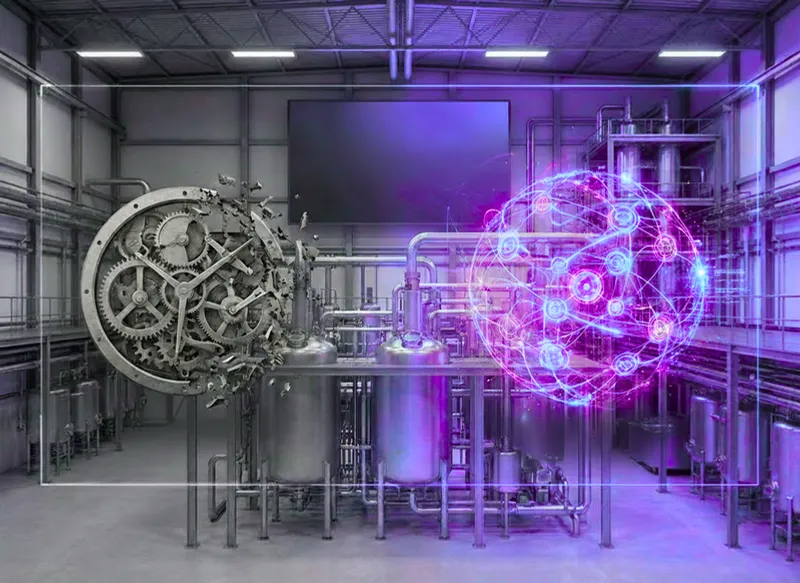

The Paradigm Shift: From Newtonian Mechanics to Quantum Complexity in Industrial Operations

The chemical manufacturing industry currently stands at a precipice of transformation that is far more fundamental than the mere adoption of new digital tools. For the better part of a century, the operational philosophy governing chemical plants—from petrochemical refineries to specialty polymerization units—has been rooted in a “Newtonian” worldview. This perspective, inherited from the Scientific Management principles of Frederick Taylor and the deterministic physics of the 19th century, views a manufacturing facility as a “clockwork” mechanism. In this model, the facility is an aggregation of discrete, independent parts—pumps, reactors, heaters, and operators—that interact in linear, predictable ways. The prevailing assumption is that if one can optimize each component individually and control the inputs with sufficient precision, the outputs will be deterministic and flawless.

However, the empirical reality of modern chemical manufacturing, particularly in complex processes such as styrene polymerization, fundamentally contradicts this mechanistic simplification. A chemical reactor is not a machine; it is a complex adaptive system (CAS) characterized by non-linearity, chaos, and deep interconnectedness, traits that align far more closely with the principles of quantum mechanics than with classical physics.

The failure to recognize this distinction has led to a systemic crisis in the industry, manifested in persistent inefficiencies, off-specification (off-spec) production, energy waste, and unpredicted safety incidents.

The Crisis of Complexity and the Newtonian Failure

The limitations of the Newtonian model become painfully evident when applied to the control of quality variables in polymerization. In a classical control loop (e.g., Proportional-Integral-Derivative or PID), the controller assumes a linear relationship between the manipulated variable (such as a cooling water valve position) and the process variable (reactor temperature). This works adequately when the system is at a steady state. However, chemical reactions are dynamic events where “the whole is greater than the sum of its parts.”

The management of these systems has historically been siloed, a direct artifact of reductionist thinking. The Maintenance department optimizes for asset availability, often pushing deferred maintenance to meet production targets.

The Operations department optimizes for throughput, often pushing reactors beyond thermal stability limits. The Quality department optimizes for specification compliance, often rejecting batches that technically meet performance needs but fail rigid, arbitrary criteria. These departments function as isolated “particles,” ignoring the “entanglement” that binds them. A vibration in a feed pump (Maintenance) causes a subtle fluctuation in monomer flow (Operations), which alters the molecular weight distribution (Quality), which eventually impacts the recycling load and energy consumption (Sustainability).

Recent management theory research suggests that to solve these problems, we must adopt Quantum Management Theory, which posits that organizations and systems function like quantum fields—continuously fluctuating, deeply interconnected, and defined by probabilities rather than certainties. In this view, the manager or the control system is not an external operator pulling levers on a machine, but an integral observer whose interactions collapse the probability wave of the plant’s potential futures into a single reality.

Defining "Quantum Thinking" in the Industrial Context

Entanglement (Systemic Interconnectedness)

In quantum physics, entangled particles remain connected such that the state of one instantly influences the state of the other, regardless of the distance separating them. In the industrial context, this principle reframes the concept of IT/OT Convergence (Information Technology / Operational Technology).

- The Newtonian View: Data is generated in silos. The Distributed Control System (DCS) holds process data; the Enterprise Resource Planning (ERP) system holds financial data. They effectively exist in separate universes, connected only by manual reporting.

- The Quantum View: Every data point is “entangled.” The amperage draw of a reactor agitator is not just an electrical metric; it is simultaneously a proxy for the viscosity of the polymer (Quality), a predictor of bearing failure (Maintenance), and a determinant of the batch’s carbon footprint (ESG). Sparrow’s IndustryOS® architecture facilitates this by creating a unified data fabric where these relationships are mapped and preserved in real-time.

Superposition (The Digital Twin)

A quantum particle exists in a superposition of all possible states until it is observed. Similarly, a manufacturing process at any given second has multiple potential future trajectories.

- The Newtonian View: Deterministic planning. “We will produce 50 tons of Grade A.” This assumes a single future path and fails when reality diverges (e.g., a raw material impurity slows the reaction).

- The Quantum View: Probabilistic simulation. A Digital Twin—a virtual replica of the physical asset—continuously simulates hundreds of potential futures based on real-time conditions. It holds these scenarios in “superposition,” allowing the control system to calculate the probability of success for each path and select the optimal trajectory to collapse the wave function into the desired outcome.

The Observer Effect (Measurement and Control)

- The Newtonian View: Sparse sampling. Lab samples are taken every 4 hours. Between samples, the process is effectively “unobserved,” allowing quality variables to drift into chaos (off-spec) without detection.

- The Quantum View: Continuous, dense observation. By using Soft Sensors (Virtual Metrology) and real-time IoT data, the system is under constant observation. This high-frequency data ingestion, a core capability of Sparrow’s iLOL® (Information Layered Over Layout) technology, essentially “freezes” the process within the desired quality control limits through the Zeno effect of continuous monitoring.

The Physics of the Problem: Styrene Polymerization Dynamics

To understand why Quantum Thinking is necessary, we must perform a deep dive into the specific engineering challenge: optimizing the Molecular Weight Distribution (MWD) in a continuous Styrene Polymerization reactor. This process is the perfect candidate for this case study because it exhibits extreme non-linearity and sensitivity to initial conditions—characteristics that defeat classical linear control strategies.

Chemical Kinetics and Non-Linearity

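The rate constant $k$ of each reaction step depends on temperature through the Arrhenius equation:

$$k = A \, e^{-E_a / (RT)}$$

where:

- $A$ is the pre-exponential factor (frequency of collisions).

- $E_a$ is the activation energy.

- $R$ is the universal gas constant.

- $T$ is the absolute temperature.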

The exponential nature of this relationship means that a minor fluctuation in temperature ($T$) does not result in a proportional change in reaction rate; it results in a dramatic, non-linear shift. This sensitivity is compounded by the interplay between the initiation rate ($R_i$) and the termination rate ($R_t$).
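A short numerical check shows how steep this sensitivity is. The sketch below evaluates the Arrhenius relation $k = A e^{-E_a/(RT)}$ defined by the symbols above; the values chosen for `A`, `Ea`, and `T` are hypothetical and are used for illustration only, not plant data.

```python
import math

R = 8.314  # universal gas constant, J/(mol*K)

def arrhenius(A, Ea, T):
    """Rate constant k = A * exp(-Ea / (R * T)); T in kelvin, Ea in J/mol."""
    return A * math.exp(-Ea / (R * T))

# Hypothetical values for illustration only (not plant data).
A = 1.0e13      # pre-exponential factor, 1/s
Ea = 120_000.0  # activation energy, J/mol
T = 400.0       # operating temperature, K

ratio = arrhenius(A, Ea, T + 5.0) / arrhenius(A, Ea, T)
print(f"A 5 K rise ({5.0 / T:.2%} of T) multiplies k by {ratio:.2f}")
```

Under these assumed values, a 1.25% rise in absolute temperature raises the rate constant by more than 50%, which is the disproportion the paragraph above describes.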

The Trommsdorff (Gel) Effect: A System at the Edge of Chaos

The most significant challenge in styrene polymerization is the Gel Effect, also known as the Trommsdorff-Norrish effect. As the polymerization proceeds, the conversion increases, and the viscosity of the reaction mixture rises by orders of magnitude.

In a Newtonian fluid, mixing and heat transfer would remain relatively constant. However, the reaction mixture is a non-Newtonian fluid. As viscosity spikes:

- The diffusion of long polymer chains becomes restricted.

- The termination rate constant ($k_t$), which relies on two long chains finding each other and colliding, drops precipitously because the chains are “trapped” in the viscous gel.

- However, the propagation rate constant ($k_p$) involves small, mobile monomer molecules which can still diffuse easily to the active chain ends.

- Consequently, the rate of chain growth ($R_p$) explodes while the rate of chain death ($R_t$) collapses.

- Since polymerization is exothermic (releasing heat), and heat removal efficiency decreases with viscosity, the reactor temperature spikes.
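The size of this auto-acceleration can be estimated with the classical steady-state expression for free-radical polymerization, $R_p = k_p [M] \sqrt{f k_d [I] / k_t}$. The sketch below is a rough illustration under that assumption (the steady-state approximation itself degrades once the gel effect sets in). All parameter values, including the initiator efficiency `f` and the decomposition constant `kd`, are hypothetical order-of-magnitude placeholders.

```python
import math

def propagation_rate(kp, kt, kd, f, monomer, initiator):
    """Steady-state free-radical rate: Rp = kp * [M] * sqrt(f * kd * [I] / kt)."""
    return kp * monomer * math.sqrt(f * kd * initiator / kt)

# Hypothetical, order-of-magnitude parameters for illustration only.
params = dict(kp=300.0, kd=1.0e-5, f=0.6, monomer=8.0, initiator=0.01)

rp_before = propagation_rate(kt=1.0e8, **params)  # low-viscosity regime
rp_during = propagation_rate(kt=1.0e6, **params)  # kt cut 100-fold by the gel effect

print(f"Rp rises by a factor of {rp_during / rp_before:.1f}")
```

Because $R_p$ scales with $k_t^{-1/2}$, a 100-fold collapse in the termination constant yields a 10-fold jump in polymerization rate, and therefore in heat release, at the moment heat removal is weakest.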

The "Hidden" Quality Variable: Molecular Weight Distribution (MWD)

The central optimization problem is that the primary quality variable, MWD, is effectively invisible during the process.

- Latency: MWD is typically measured via Gel Permeation Chromatography (GPC) in a laboratory. This process takes 2 to 4 hours.

- Blindness: The plant operators control the reactor based on secondary variables—Temperature, Pressure, and Flow Rate. They operate under the assumption that a stable temperature correlates to a stable MWD.

- The Quantum Disconnect: This is equivalent to navigating a ship by looking at the wake behind it rather than the horizon ahead. The operational reality is probabilistic; operators make adjustments based on experience and intuition, hoping that the unobserved MWD variable remains within the “Goldilocks zone.”

This latency and lack of observability create a massive efficiency gap. If a process disturbance (e.g., a feed impurity) alters the MWD, the plant will continue to produce off-spec material for up to 4 hours until the lab result triggers an alarm. This “blind production” window is the primary source of waste and financial loss in the sector.

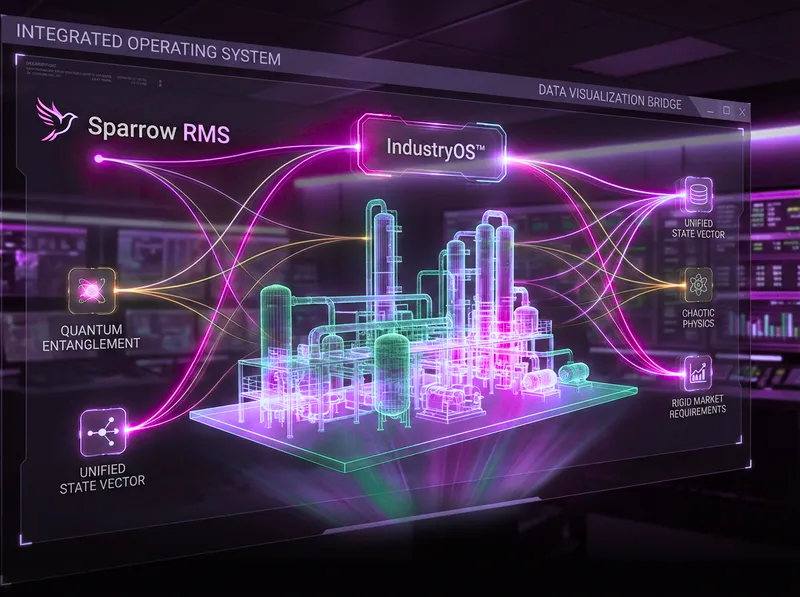

The Solution Architecture: Sparrow Infinity & IndustryOS®

The Architecture of Entanglement

- iLOL® Integration: Maps data to physical coordinates.

- Site Shield: Ensures secure, version-controlled data access.

- Universal Data: Standardizes inputs from disparate machine protocols (OPC-UA, Modbus). 11

- Collaborated Workflow: Integrates operations, maintenance, and quality workflows.

- AI/ML Analytics: Runs predictive models for quality and throughput.

- Virtual Metrology: Calculates unmeasured variables (like MWD) in real-time. 19

- Dynamic Risk Assessment: Real-time HAZOP and PSSR integration.

- Management of Change (MOC): Digitalizes the approval workflow for process changes. 20

- Sustainability Tracking: Entangled with process data to calculate emissions per unit of production.

- Incident Management: Tracks near-misses and safety incidents. 20

- Scope 1, 2, & 3 Accounting: Real-time calculation based on consumption data.

- Gen-AI Reporting: Automates regulatory disclosures. 4

iLOL®: The Spatial Entanglement Engine

IT/OT Convergence: The Fabric of Truth

- Operational Technology (OT): This is the high-frequency data from the shop floor—temperatures, pressures, valve positions, motor currents. It represents the physical reality of the process.

- Information Technology (IT): This is the transactional data—production schedules, raw material batch numbers, cost data, customer specifications. It represents the business reality.

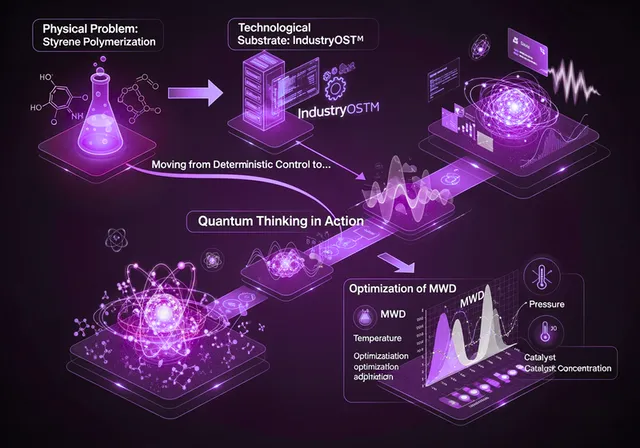

Quantum Thinking in Action: The Optimization Workflow

From Latency to Virtual Metrology (Soft Sensors)

The first step in the "Sapphire" module is to render the invisible visible. Since we cannot physically measure MWD in real-time, we must construct a Soft Sensor—a mathematical model that infers MWD from available data. This utilizes the Digital Twin capabilities. The Digital Twin of the reactor runs a parallel simulation of the polymerization kinetics. It takes the real-time inputs from the OT layer:

- Reactor temperatures $T_{in}$, $T_{out}$, $T_{jacket}$

- Agitator power $P$: a critical proxy for viscosity $\mu$

- Monomer flow rate $F_m$

- Initiator flow rate $F_i$

Using these inputs, the Twin solves the differential equations of mass and energy balance in real-time to output a Predicted MWD.

This transforms MWD from a lagging variable (4-hour delay) to a real-time variable (1-second latency). The system effectively "observes" the MWD continuously, allowing for immediate control actions.
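The source describes a first-principles twin that solves mass and energy balances. As a much simpler stand-in, the sketch below shows the data-driven flavor of a soft sensor: it calibrates a straight line between agitator power and lab-measured MWD, then uses live power readings to estimate MWD between lab samples. The calibration data, the single-input linear model, and the names `fit_line` and `soft_sensor` are all hypothetical.

```python
def fit_line(xs, ys):
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

# Hypothetical calibration set: agitator power (kW) at the time each lab
# sample was drawn, paired with the lab-measured MWD index for that sample.
power_kw = [40.0, 42.0, 44.0, 46.0, 48.0]
lab_mwd = [2.30, 2.40, 2.50, 2.60, 2.70]

slope, intercept = fit_line(power_kw, lab_mwd)

def soft_sensor(power):
    """Infer the MWD index from live agitator power between lab samples."""
    return slope * power + intercept

print(round(soft_sensor(45.0), 2))
```

A production soft sensor would combine several inputs (temperatures, flows, power) and be re-calibrated whenever a new lab result arrives; the single-variable fit here only shows the inference step.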

Probabilistic Decision Making

- The Prediction: Instead of saying “The MWD is 2.5,” the system says “There is a 95% probability that the MWD is between 2.4 and 2.6.”

- Scenario Analysis: The system runs multiple Monte Carlo simulations into the future (Superposition).


- Scenario A: Keep settings constant -> Probability of Gel Effect in 10 mins = 60%.

- Scenario B: Lower Temp by 1°C -> Probability of Gel Effect = 10%, but Yield drops by 0.5%.

- Scenario C: Increase Solvent Flow -> Probability of Gel Effect = 5%, Cost increases by $20.

The system then optimizes for the Expected Value, weighing the cost of off-spec product against the cost of energy and raw materials. This is Risk-Based Optimization, a core tenet of Quantum Management.
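The expected-value comparison can be sketched in a few lines. The gel-effect probabilities, the 0.5% yield drop, and the $20 solvent cost come from Scenarios A to C above; the two monetary conversion factors (`GEL_EVENT_COST` and `COST_PER_YIELD_POINT`) are hypothetical placeholders, since the source does not state them.

```python
# Probabilities and direct costs are taken from Scenarios A-C above; the two
# monetary conversion factors below are hypothetical placeholders.
GEL_EVENT_COST = 10_000.0     # assumed cost of one gel-effect event, $
COST_PER_YIELD_POINT = 400.0  # assumed cost of losing 1 percentage point of yield, $

scenarios = {
    "A: keep settings": {"p_gel": 0.60, "yield_drop_pct": 0.0, "extra_cost": 0.0},
    "B: lower temp 1 C": {"p_gel": 0.10, "yield_drop_pct": 0.5, "extra_cost": 0.0},
    "C: more solvent": {"p_gel": 0.05, "yield_drop_pct": 0.0, "extra_cost": 20.0},
}

def expected_cost(s):
    """Probability-weighted cost of a gel event plus the certain costs of acting."""
    return (s["p_gel"] * GEL_EVENT_COST
            + s["yield_drop_pct"] * COST_PER_YIELD_POINT
            + s["extra_cost"])

costs = {name: expected_cost(s) for name, s in scenarios.items()}
best = min(costs, key=costs.get)

for name, cost in costs.items():
    print(f"{name}: expected cost ${cost:,.0f}")
print("Selected:", best)
```

With these assumed cost factors Scenario C has the lowest expected cost, but the ranking depends on them: a much cheaper gel event or a much more expensive solvent would change the choice.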

Case Study Simulation: Managing the Trommsdorff Effect

Let us simulate a control event in this Quantum-enabled environment to demonstrate the superiority over classical control.

The Event: A subtle drop in the concentration of the inhibitor (TBC) in the styrene feed occurs. This makes the monomer more reactive, pushing the system closer to the auto-acceleration threshold (Gel Effect).

Classical Response (Newtonian):

- The PID controller sees the Temperature is stable at the Setpoint. It takes no action.

- Reaction rate slowly accelerates. Viscosity creeps up.

- Suddenly, the Gel Effect triggers. Heat generation exceeds removal capacity. Temperature spikes.

- The PID controller fully opens the cooling valve, but it is too late. The “runaway” occurs for 10 minutes.

- Result: The batch is ruined (broad MWD). The safety system might trip the reactor (Emergency Shutdown).

Quantum-Enabled Response (IndustryOS®):

- Detection: The “Rock” module detects a slight increase in Agitator Power (Viscosity) that is disproportionate to the current Conversion rate calculated by the Digital Twin.

- Inference: The AI in “Sapphire” infers a discrepancy in the reaction kinetics. It calculates a high probability that the feed reactivity has changed (Inhibitor drop).

- Prediction: The Digital Twin simulates the next 30 minutes. It predicts a 92% chance of thermal runaway if no action is taken.

- Pre-emption: The system acts before the temperature spikes. It advises the operator (or acts autonomously via MPC) to increase the solvent flow rate slightly to dampen the viscosity rise and lower the jacket temperature setpoint by 2°C.

- Result: The Gel Effect is mitigated. The MWD remains narrow and within spec. The reactor remains stable.
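The detection step in the sequence above amounts to a residual check: compare measured agitator power with the value the Digital Twin expects at the current conversion, and raise a flag when the gap persists. The sketch below is one plausible way to do that; the function name `first_alarm`, the 1.5 kW tolerance, the three-reading persistence rule, and the sample readings are all hypothetical and do not describe the actual “Rock” module logic.

```python
def first_alarm(measured, expected, tolerance_kw=1.5, consecutive=3):
    """Return the index of the reading at which measured power has exceeded
    the twin's expected power by more than tolerance_kw for `consecutive`
    readings in a row, or None if that never happens."""
    run = 0
    for i, (m, e) in enumerate(zip(measured, expected)):
        run = run + 1 if (m - e) > tolerance_kw else 0
        if run >= consecutive:
            return i
    return None

# Hypothetical readings (kW): the twin expects a slow rise with conversion,
# while the measured power starts to climb faster from the third sample on.
expected = [45.0, 45.1, 45.2, 45.3, 45.4, 45.5, 45.6]
measured = [45.2, 45.0, 46.9, 47.2, 47.6, 48.1, 48.9]

alarm_index = first_alarm(measured, expected)
print("Alarm at sample:", alarm_index)
```

Requiring several consecutive exceedances is a simple guard against sensor noise; the trade-off is a slightly later alarm.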

Transformation Roadmap & Business Impact

The insights enabled BD to formulate a cohesive transformation strategy:

Phase 1: Create Supply Chain Visibility: Address D2: Horizontal Integration by implementing a cloud-based supply chain control tower to integrate data from key suppliers, providing real-time visibility.

Phase 2: Implement Intelligent Forecasting: To improve D11: Enterprise Intelligence, deploy an AI-powered demand forecasting engine to leverage new real-time data.

Phase 3: Standardize and Scale Automation: Develop a standardized automation playbook based on the best practices from its most mature lines to guide a phased rollout of solutions, targeting improvements in D4: Shop Floor Automation.

Integrating Safety and Sustainability

The Bridge to Quantum Computing (Hardware & Algorithms)

The Limits of Classical Simulation in Chemistry

Current Digital Twins, no matter how advanced, rely on approximations. Classical computers cannot solve the Schrödinger equation exactly for complex multi-body systems (like long polymer chains) because the cost of an exact solution scales exponentially with the number of electrons.

The Approximation Gap: Classical simulations use “Mean Field” approximations or Density Functional Theory (DFT) which introduce errors. They typically struggle to accurately predict reaction rates for novel catalysts or highly correlated transition states.27 This limits the Digital Twin’s ability to handle “unknown unknowns”—new impurities or new polymer grades.

- Classical Loop: IndustryOS® monitors the reactor.

- Quantum Trigger: The system encounters a molecular configuration (e.g., a new catalyst mix) that the classical model cannot simulate accurately.

- Quantum Offload: The problem is encoded and sent to the QPU.

- Quantum Solution: The QPU simulates the ground state energy and reaction pathway.

- Integration: The result is fed back into the Digital Twin to update the kinetic parameters.

Key Quantum Algorithms for Chemical Manufacturing

Several specific quantum algorithms are poised to revolutionize this space.

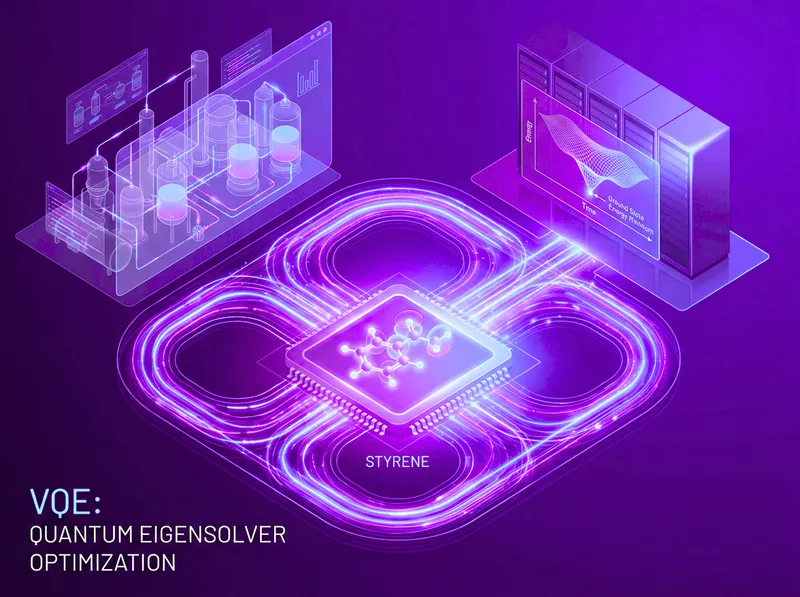

Variational Quantum Eigensolver (VQE)

VQE is a hybrid algorithm designed for Noisy Intermediate-Scale Quantum (NISQ) computers. It is used to find the ground state energy of a molecule.

- Application: In our Styrene case, VQE could be used to simulate the interaction between the free radical chain end and a specific impurity molecule. This would provide an ultra-precise kinetic rate constant $k_{\text{inhibition}}$ for the Digital Twin, eliminating the guesswork in handling feed impurities.

- Impact: This moves the Digital Twin from “Empirical” (based on past data) to “First Principles” (based on physics), allowing the plant to optimize for chemistries it has never run before.
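The variational principle behind VQE can be illustrated with a purely classical toy. The sketch below minimizes the energy of a hypothetical single-qubit Hamiltonian $H = aZ + bX$ over the one-parameter ansatz $R_y(\theta)|0\rangle$. It is not a molecular simulation: on real hardware the expectation values would be estimated from repeated measurements, and a grid scan stands in for the classical optimizer.

```python
import math

# Hypothetical single-qubit Hamiltonian H = a*Z + b*X (not a real molecule).
a, b = 1.0, 0.5

def energy(theta):
    """Expectation value of H for the ansatz Ry(theta)|0>.

    Ry(theta)|0> = cos(theta/2)|0> + sin(theta/2)|1>, so <Z> = cos(theta)
    and <X> = sin(theta). On hardware these would be estimated from shots.
    """
    return a * math.cos(theta) + b * math.sin(theta)

# Classical outer loop: a coarse grid scan stands in for the optimizer.
thetas = [2 * math.pi * k / 3600 for k in range(3600)]
best_theta = min(thetas, key=energy)
vqe_energy = energy(best_theta)

exact_ground = -math.sqrt(a**2 + b**2)  # smallest eigenvalue of a*Z + b*X
print(f"variational: {vqe_energy:.4f}, exact: {exact_ground:.4f}")
```

The variational estimate can never fall below the true ground-state energy, which is what makes the approach usable on noisy hardware: any parameter setting gives an upper bound, and the optimizer tightens it.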

Quantum-Inspired Algorithms: The Bridge

- Simulated Annealing: Mimics the physics of annealing to solve optimization problems.

- Tensor Networks: Mathematical structures used in quantum physics that can be applied to machine learning to compress massive datasets and find hidden correlations in high-dimensional process data.
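The Simulated Annealing entry above can be sketched on a small QUBO. This is a minimal illustration with a geometric cooling schedule and single-bit flips; the coefficients in `Q`, the schedule parameters, and the function names are hypothetical, and nothing here reflects a specific vendor implementation.

```python
import math
import random

def qubo_energy(x, Q):
    """Sum of Q[i, j] * x[i] * x[j] over all listed (i, j) pairs."""
    return sum(c * x[i] * x[j] for (i, j), c in Q.items())

def simulated_annealing(Q, n, steps=2000, t_start=2.0, t_end=0.01, seed=0):
    rng = random.Random(seed)
    x = [0] * n
    e = qubo_energy(x, Q)
    best_x, best_e = x[:], e
    for step in range(steps):
        # Geometric cooling schedule from t_start down to t_end.
        t = t_start * (t_end / t_start) ** (step / (steps - 1))
        i = rng.randrange(n)
        x[i] ^= 1  # propose flipping one bit
        e_new = qubo_energy(x, Q)
        # Always accept improvements; accept worse moves with Boltzmann probability.
        if e_new <= e or rng.random() < math.exp((e - e_new) / t):
            e = e_new
            if e < best_e:
                best_x, best_e = x[:], e
        else:
            x[i] ^= 1  # reject: undo the flip
    return best_x, best_e

# Hypothetical 4-variable QUBO with two mutually exclusive pairs.
Q = {(0, 0): -1.0, (1, 1): -1.0, (2, 2): -1.0, (3, 3): -1.0,
     (0, 1): 2.0, (2, 3): 2.0, (1, 2): 0.5}

solution, value = simulated_annealing(Q, 4)
print(solution, value)
```

Accepting occasional uphill moves at high temperature is what lets the search escape local optima; as the temperature falls, the walk settles into a low-energy configuration. The method offers no guarantee of reaching the global optimum.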

Strategic Implementation & Future Outlook

The transition to a Quantum-optimized manufacturing environment is not a “plug-and-play” upgrade. It requires a strategic roadmap that encompasses technology, culture, and workforce transformation.

The Roadmap to Quantum Readiness

For a chemical manufacturer, the journey involves three distinct horizons:

• Horizon 1: The Digital Foundation (0–3 Years).

- Objective: Total Data Entanglement.

- Action: Deploy IndustryOS® Rock and Sapphire. Eliminate data silos. Implement iLOL® to visualize the plant’s state. Build the classical Digital Twin.

- Result: Visibility. The plant moves from “Blind” to “Observed.” Variation in MWD is reduced by stabilization.

• Horizon 2: The Probabilistic Shift (3–7 Years).

- Objective: Probabilistic Control and Hybrid Experimentation.

- Action: Implement AI/ML for Soft Sensors. Shift control logic from deterministic to probabilistic. Begin pilot projects with Quantum-Inspired Optimization (Simulated Annealing) for scheduling.

- Result: Predictability. The plant anticipates disturbances. Off-spec production drops significantly.

• Horizon 3: The Quantum Leap (7–15 Years).

- Objective: First-Principles Simulation and Real-Time Quantum Control.

- Action: Integrate Cloud QPUs (Quantum Processing Units) into the workflow. Use algorithms like VQE for real-time molecular simulation of new grades. Use Quantum Annealing for global plant optimization.

- Result: Adaptability. The plant can switch products instantly, handle any feedstock, and operate at the theoretical limits of physics.

Workforce Transformation: The Human Observer

Comparative Analysis: Results of Transformation

| Metric | Traditional (Newtonian) Baseline | Optimized (IndustryOS® / Quantum Thinking) | Improvement Mechanism |
|---|---|---|---|
| MWD Variability ($\sigma$) | 5.2 | 3.1 | Virtual Metrology reduces feedback latency from 4 hours to <1 minute. |
| Off-Spec Production | 4.50% | 0.80% | Probabilistic Prediction anticipates Gel Effect and prevents thermal runaway. |
| Energy Consumption | 1200 kWh/ton | 1050 kWh/ton | Global Optimization avoids overheating and excessive cooling cycles. |
| Reaction Yield | 94.00% | 96.50% | Precise Kinetics allows operation closer to stoichiometric limits. |
| Safety Incidents | 0.5 / year | < 0.1 / year | Dynamic PSM (Ruby) creates real-time safety envelopes. |
| Decision Speed | Hours (Reactive) | Seconds (Proactive) | iLOL® visualization creates immediate situational awareness. |
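For readers who want the relative changes implied by the table, the short calculation below derives them from the baseline and optimized figures. The numbers are copied from the table above; only the helper name `relative_reduction` is introduced here.

```python
# (baseline, optimized) pairs copied from the comparison table above.
metrics = {
    "MWD variability (sigma)": (5.2, 3.1),
    "Off-spec production (%)": (4.50, 0.80),
    "Energy consumption (kWh/ton)": (1200.0, 1050.0),
}

def relative_reduction(baseline, optimized):
    """Fractional reduction relative to the baseline."""
    return (baseline - optimized) / baseline

for name, (base, opt) in metrics.items():
    print(f"{name}: {relative_reduction(base, opt):.1%} lower")

# Yield is reported in percent, so its change is in percentage points.
yield_gain_points = 96.50 - 94.00
print(f"Reaction yield: +{yield_gain_points:.1f} percentage points")
```

This works out to roughly a 40% reduction in MWD variability, an 82% reduction in off-spec production, a 12.5% reduction in energy consumption, and a 2.5 percentage point gain in yield.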